Chapter 3. LVM (Logical Volume Manager)

LVM is a tool for logical volume management which includes allocating disks, striping, mirroring and resizing logical volumes.

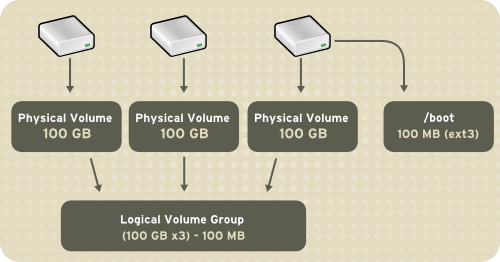

With LVM, a hard drive or set of hard drives is allocated to one or more physical volumes. LVM physical volumes can be placed on other block devices which might span two or more disks.

The physical volumes are combined into logical volumes, with the exception of the /boot/ partition. The /boot/ partition cannot be on a logical volume group because the boot loader cannot read it. If the root (/) partition is on a logical volume, create a separate /boot/ partition which is not a part of a volume group.

Since a physical volume cannot span over multiple drives, to span over

more than one drive, create one or more physical volumes per drive.

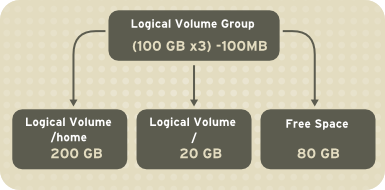

The volume groups can be divided into logical volumes, which are assigned mount points, such as /home and /

and file system types, such as ext2 or ext3. When "partitions" reach

their full capacity, free space from the volume group can be added to

the logical volume to increase the size of the partition. When a new

hard drive is added to the system, it can be added to the volume group,

and partitions that are logical volumes can be increased in size.

On the other hand, if a system is partitioned with the ext3 file

system, the hard drive is divided into partitions of defined sizes. If a

partition becomes full, it is not easy to expand the size of the

partition. Even if the partition is moved to another hard drive, the

original hard drive space has to be reallocated as a different partition

or not used.

This chapter on LVM/LVM2 focuses on the use of the LVM GUI administration tool, i.e. system-config-lvm.

For comprehensive information on the creation and configuration of LVM

partitions in clustered and non-clustered storage, please refer to the Logical Volume Manager Administration guide also provided by Red Hat.

In addition, the Installation Guide for

Red Hat Enterprise Linux 6 also documents how to create and configure

LVM logical volumes during installation. For more information, refer to

the Create LVM Logical Volume section of the Installation Guide for Red Hat Enterprise Linux 6.

LVM version 2, or LVM2, was the default for Red Hat Enterprise Linux

5, which uses the device mapper driver contained in the 2.6 kernel. LVM2

can be upgraded from versions of Red Hat Enterprise Linux running the

2.4 kernel.

3.2. Using system-config-lvm

The LVM utility allows you to manage logical volumes within X windows

or graphically. You can access the application by selecting from your

menu panel > > . Alternatively you can start the Logical Volume Management utility by typing system-config-lvm from a terminal.

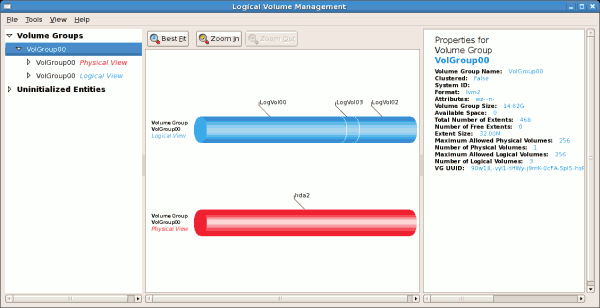

In the example used in this section, the following are the details

for the volume group that was created during the installation:

/boot - (Ext3) file system. Displayed under 'Uninitialized Entities'. (DO NOT initialize this partition).

LogVol00 - (LVM) contains the (/) directory (312 extents).

LogVol02 - (LVM) contains the (/home) directory (128 extents).

LogVol03 - (LVM) swap (28 extents).

The logical volumes above were created in disk entity

/dev/hda2 while

/boot was created in

/dev/hda1. The system also consists of 'Uninitialised Entities' which are illustrated in

Figure 3.7, “Uninitialized Entities”.

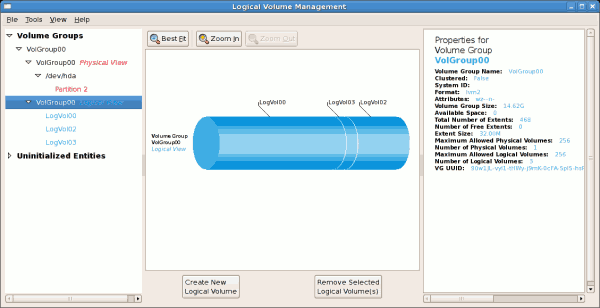

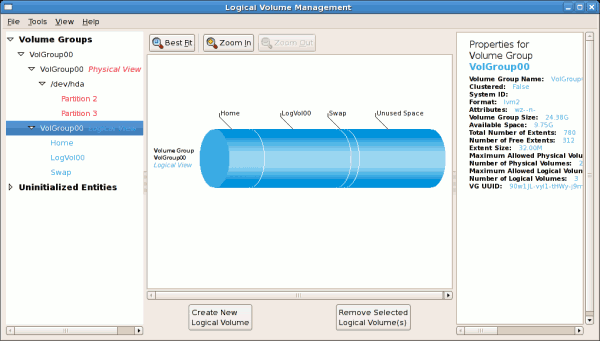

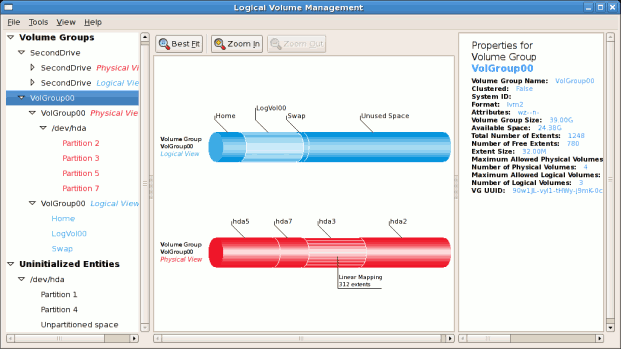

The figure below illustrates the main window in the LVM utility. The

logical and the physical views of the above configuration are

illustrated below. The three logical volumes exist on the same physical

volume (hda2).

Figure 3.3. Main LVM Window

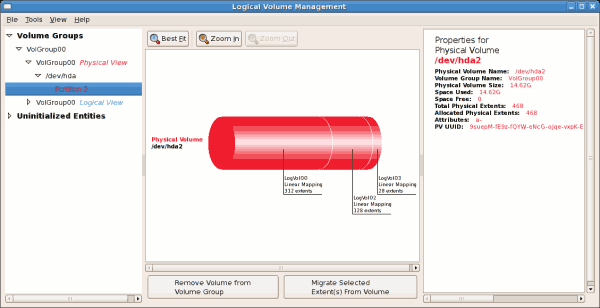

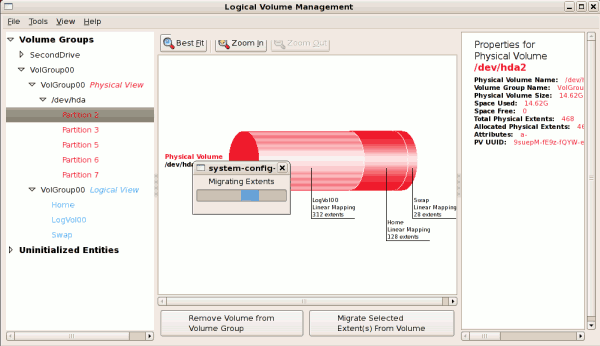

The figure below illustrates the physical view for the volume. In

this window, you can select and remove a volume from the volume group or

migrate extents from the volume to another volume group. Steps to

migrate extents are discussed in

Figure 3.12, “Migrate Extents”.

Figure 3.4. Physical View Window

The figure below illustrates the logical view for the selected volume

group. The logical volume size is also indicated with the individual

logical volume sizes illustrated.

Figure 3.5. Logical View Window

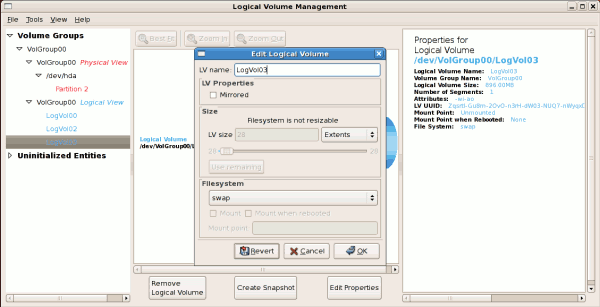

On the left side column, you can select the individual logical

volumes in the volume group to view more details about each. In this

example the objective is to rename the logical volume name for

'LogVol03' to 'Swap'. To perform this operation select the respective

logical volume and click on the Edit Properties

button. This will display the Edit Logical Volume window from which you

can modify the Logical volume name, size (in extents) and also use the

remaining space available in a logical volume group. The figure below

illustrates this.

Please note that this logical volume cannot be changed in size as

there is currently no free space in the volume group. If there was

remaining space, this option would be enabled (see

Figure 3.21, “Edit logical volume”). Click on the

OK button to save your changes (this will remount the volume). To cancel your changes click on the

Cancel button. To revert to the last snapshot settings click on the

Revert button. A snapshot can be created by clicking on the

Create Snapshot button on the LVM utility window. If the selected logical volume is in use by the system (for example) the

/ (root) directory, this task will not be successful as the volume cannot be unmounted.

Figure 3.6. Edit Logical Volume

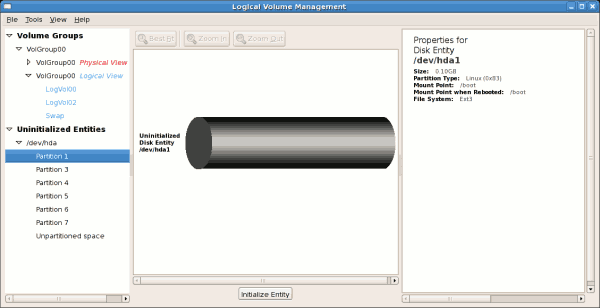

3.2.1. Utilizing Uninitialized Entities

'Uninitialized Entities' consist of unpartitioned space and non LVM

file systems. In this example partitions 3, 4, 5, 6 and 7 were created

during installation and some unpartitioned space was left on the hard

disk. Please view each partition and ensure that you read the

'Properties for Disk Entity' on the right column of the window to ensure

that you do not delete critical data. In this example partition 1

cannot be initialized as it is /boot. Uninitialized entities are illustrated below.

Figure 3.7. Uninitialized Entities

In this example, partition 3 will be initialized and added to an

existing volume group. To initialize a partition or unpartioned space,

select the partition and click on the Initialize Entity button. Once initialized, a volume will be listed in the 'Unallocated Volumes' list.

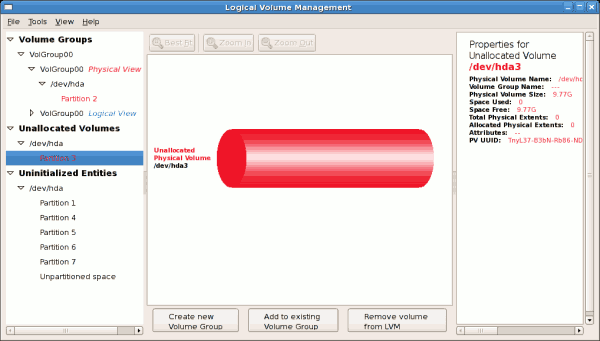

3.2.2. Adding Unallocated Volumes to a Volume Group

Once initialized, a volume will be listed in the 'Unallocated

Volumes' list. The figure below illustrates an unallocated partition

(Partition 3). The respective buttons at the bottom of the window allow

you to:

create a new volume group,

add the unallocated volume to an existing volume group,

remove the volume from LVM.

To add the volume to an existing volume group, click on the

Add to Existing Volume Group button.

Figure 3.8. Unallocated Volumes

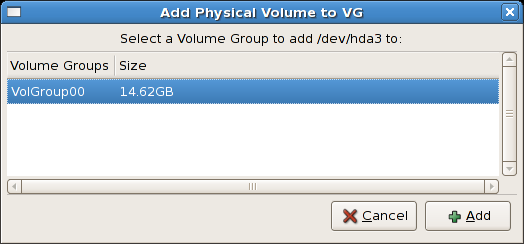

Clicking on the Add to Existing Volume Group

button will display a pop up window listing the existing volume groups

to which you can add the physical volume you are about to initialize. A

volume group may span across one or more hard disks. In this example

only one volume group exists as illustrated below.

Figure 3.9. Add physical volume to volume group

Once added to an existing volume group the new logical volume is

automatically added to the unused space of the selected volume group.

You can use the unused space to:

create a new logical volume (click on the Create New Logical Volume(s) button,

select an existing logical volume and remove it from the volume group by clicking on the Remove Selected Logical Volume(s) button. Please note that you cannot select unused space to perform this operation.

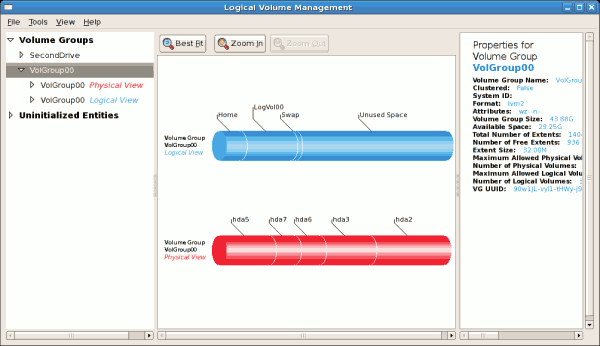

The figure below illustrates the logical view of 'VolGroup00' after adding the new volume group.

Figure 3.10. Logical view of volume group

In the figure below, the uninitialized entities (partitions 3, 5, 6 and 7) were added to 'VolGroup00'.

Figure 3.11. Logical view of volume group

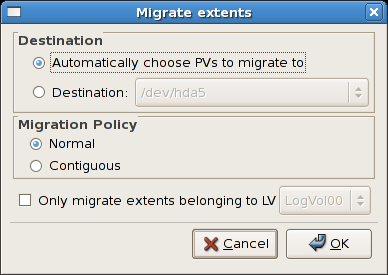

To migrate extents from a physical volume, select the volume and click on the

Migrate Selected Extent(s) From Volume

button. Please note that you need to have a sufficient number of free

extents to migrate extents within a volume group. An error message will

be displayed if you do not have a sufficient number of free extents. To

resolve this problem, please extend your volume group (see

Section 3.2.6, “Extending a Volume Group”).

If a sufficient number of free extents is detected in the volume group,

a pop up window will be displayed from which you can select the

destination for the extents or automatically let LVM choose the physical

volumes (PVs) to migrate them to. This is illustrated below.

Figure 3.12. Migrate Extents

The figure below illustrates a migration of extents in progress. In

this example, the extents were migrated to 'Partition 3'.

Figure 3.13. Migrating extents in progress

Once the extents have been migrated, unused space is left on the

physical volume. The figure below illustrates the physical and logical

view for the volume group. Please note that the extents of LogVol00

which were initially in hda2 are now in hda3. Migrating extents allows

you to move logical volumes in case of hard disk upgrades or to manage

your disk space better.

Figure 3.14. Logical and physical view of volume group

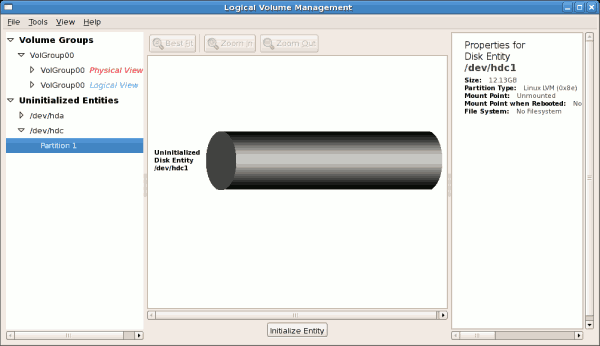

3.2.4. Adding a New Hard Disk Using LVM

In this example, a new IDE hard disk was added. The figure below

illustrates the details for the new hard disk. From the figure below,

the disk is uninitialized and not mounted. To initialize a partition,

click on the

Initialize Entity button. For more details, see

Section 3.2.1, “Utilizing Uninitialized Entities”. Once initialized, LVM will add the new volume to the list of unallocated volumes as illustrated in

Figure 3.16, “Create new volume group”.

Figure 3.15. Uninitialized hard disk

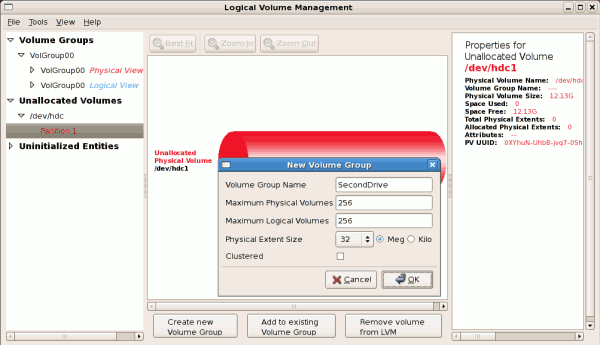

3.2.5. Adding a New Volume Group

Once initialized, LVM will add the new volume to the list of

unallocated volumes where you can add it to an existing volume group or

create a new volume group. You can also remove the volume from LVM. The

volume if removed from LVM will be listed in the list of 'Uninitialized

Entities' as illustrated in

Figure 3.15, “Uninitialized hard disk”. In this example, a new volume group was created as illustrated below.

Figure 3.16. Create new volume group

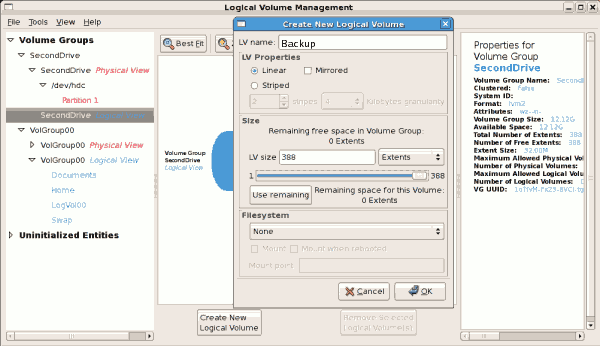

Once created a new volume group will be displayed in the list of

existing volume groups as illustrated below. The logical view will

display the new volume group with unused space as no logical volumes

have been created. To create a logical volume, select the volume group

and click on the Create New Logical Volume

button as illustrated below. Please select the extents you wish to use

on the volume group. In this example, all the extents in the volume

group were used to create the new logical volume.

Figure 3.17. Create new logical volume

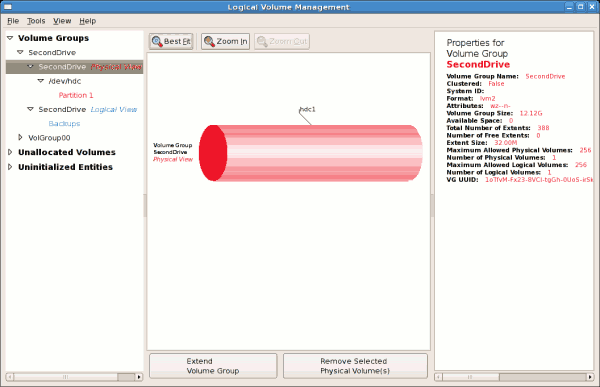

The figure below illustrates the physical view of the new volume

group. The new logical volume named 'Backups' in this volume group is

also listed.

Figure 3.18. Physical view of new volume group

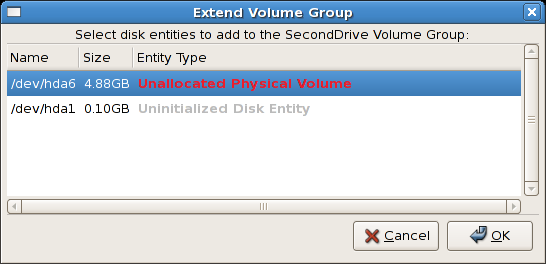

3.2.6. Extending a Volume Group

In this example, the objective was to extend the new volume group to

include an uninitialized entity (partition). This was to increase the

size or number of extents for the volume group. To extend the volume

group, click on the

Extend Volume Group

button. This will display the 'Extend Volume Group' window as

illustrated below. On the 'Extend Volume Group' window, you can select

disk entities (partitions) to add to the volume group. Please ensure

that you check the contents of any 'Uninitialized Disk Entities'

(partitions) to avoid deleting any critical data (see

Figure 3.15, “Uninitialized hard disk”). In the example, the disk entity (partition)

/dev/hda6 was selected as illustrated below.

Figure 3.19. Select disk entities

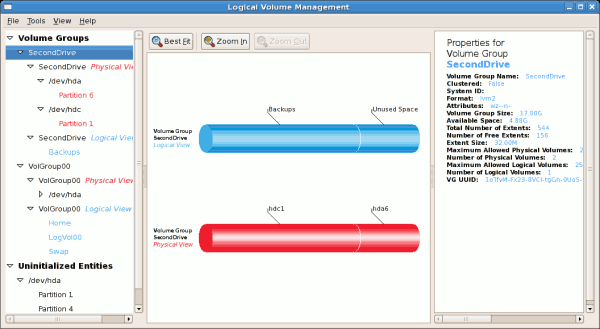

Once added, the new volume will be added as 'Unused Space' in the

volume group. The figure below illustrates the logical and physical view

of the volume group after it was extended.

Figure 3.20. Logical and physical view of an extended volume group

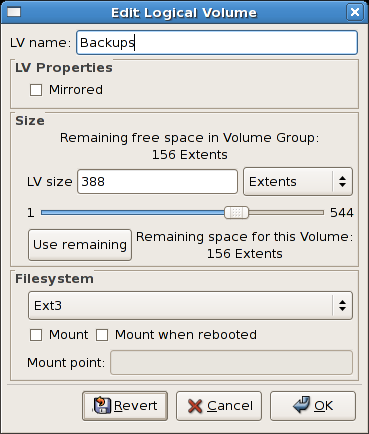

3.2.7. Editing a Logical Volume

The LVM utility allows you to select a logical volume in the volume

group and modify its name, size and specify file system options. In this

example, the logical volume named 'Backups" was extended onto the

remaining space for the volume group.

Clicking on the Edit Properties

button will display the 'Edit Logical Volume' popup window from which

you can edit the properties of the logical volume. On this window, you

can also mount the volume after making the changes and mount it when the

system is rebooted. Please note that you should indicate the mount

point. If the mount point you specify does not exist, a popup window

will be displayed prompting you to create it. The 'Edit Logical Volume'

window is illustrated below.

Figure 3.21. Edit logical volume

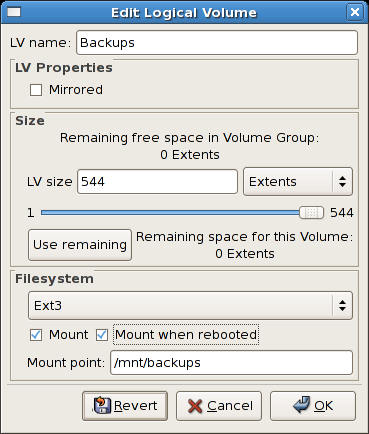

If you wish to mount the volume, select the 'Mount' checkbox

indicating the preferred mount point. To mount the volume when the

system is rebooted, select the 'Mount when rebooted' checkbox. In this

example, the new volume will be mounted in /mnt/backups. This is illustrated in the figure below.

Figure 3.22. Edit logical volume - specifying mount options

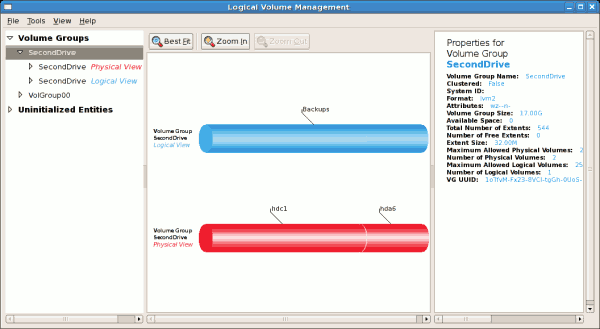

The figure below illustrates the logical and physical view of the

volume group after the logical volume was extended to the unused space.

Please note in this example that the logical volume named 'Backups'

spans across two hard disks. A volume can be stripped across two or more

physical devices using LVM.

Figure 3.23. Edit logical volume

Use these sources to learn more about LVM.

rpm -qd lvm2 — This command shows all the documentation available from the lvm package, including man pages.

lvm help — This command shows all LVM commands available.

The utility

parted allows users to:

View the existing partition table

Change the size of existing partitions

Add partitions from free space or additional hard drives

By default, the parted package is included when installing Red Hat Enterprise Linux. To start parted, log in as root and type the command parted /dev/sda at a shell prompt (where /dev/sda

If you want to remove or resize a partition, the device on which that

partition resides must not be in use. Creating a new partition on a

device which is in use—while possible—is not recommended.

For a device to not be in use, none of the partitions on the device

can be mounted, and any swap space on the device must not be enabled.

As well, the partition table should not be modified while it is in use

because the kernel may not properly recognize the changes. If the

partition table does not match the actual state of the mounted

partitions, information could be written to the wrong partition,

resulting in lost and overwritten data.

The easiest way to achieve this it to boot your system in rescue mode. When prompted to mount the file system, select Skip.

Alternately, if the drive does not contain any partitions in use

(system processes that use or lock the file system from being

unmounted), you can unmount them with the umount command and turn off all the swap space on the hard drive with the swapoff command.

Table 4.1, “parted commands” contains a list of commonly used

parted commands. The sections that follow explain some of these commands and arguments in more detail.

Table 4.1. parted commands

|

Command

|

Description

|

|---|

check minor-num

|

Perform a simple check of the file system

|

cp from to

|

Copy file system from one partition to another; from and to are the minor numbers of the partitions

|

help

|

Display list of available commands

|

mklabel label

|

Create a disk label for the partition table

|

mkfs minor-num file-system-type

|

Create a file system of type file-system-type

|

mkpart part-type fs-type start-mb end-mb

|

Make a partition without creating a new file system

|

mkpartfs part-type fs-type start-mb end-mb

|

Make a partition and create the specified file system

|

move minor-num start-mb end-mb

|

Move the partition

|

name minor-num name

|

Name the partition for Mac and PC98 disklabels only

|

print

|

Display the partition table

|

quit

|

Quit parted

|

rescue start-mb end-mb

|

Rescue a lost partition from start-mb to end-mb

|

resize minor-num start-mb end-mb

|

Resize the partition from start-mb to end-mb

|

rm minor-num

|

Remove the partition

|

select device

|

Select a different device to configure

|

set minor-num flag state

|

Set the flag on a partition; state is either on or off

|

toggle [NUMBER [FLAG]

|

Toggle the state of FLAG on partition NUMBER

|

unit UNIT

|

Set the default unit to UNIT

|

4.1. Viewing the Partition Table

After starting parted, use the command print to view the partition table. A table similar to the following appears:

Model: ATA ST3160812AS (scsi)

Disk /dev/sda: 160GB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Number Start End Size Type File system Flags

1 32.3kB 107MB 107MB primary ext3 boot

2 107MB 105GB 105GB primary ext3

3 105GB 107GB 2147MB primary linux-swap

4 107GB 160GB 52.9GB extended root

5 107GB 133GB 26.2GB logical ext3

6 133GB 133GB 107MB logical ext3

7 133GB 160GB 26.6GB logical lvm

The first line contains the disk type, manufacturer, model number and

interface, and the second line displays the disk label type. The

remaining output below the fourth line shows the partition table.

In the partition table, the Minor number is the partition number. For example, the partition with minor number 1 corresponds to /dev/sda1. The Start and End values are in megabytes. Valid Type are metadata, free, primary, extended, or logical. The Filesystem is the file system type, which can be any of the following:

ext2

ext3

fat16

fat32

hfs

jfs

linux-swap

ntfs

reiserfs

hp-ufs

sun-ufs

xfs

If a Filesystem of a device shows no value, this means that its file system type is unknown.

The Flags column lists the flags set for the partition. Available flags are boot, root, swap, hidden, raid, lvm, or lba.

To select a different device without having to restart parted, use the select command followed by the device name (for example, /dev/sda). Doing so allows you to view or configure the partition table of a device.

4.2. Creating a Partition

Do not attempt to create a partition on a device that is in use.

Before creating a partition, boot into rescue mode (or unmount any

partitions on the device and turn off any swap space on the device).

Start parted, where /dev/sda is the device on which to create the partition:

parted /dev/sda

View the current partition table to determine if there is enough free space:

print

4.2.1. Making the Partition

From the partition table, determine the start and end points of the

new partition and what partition type it should be. You can only have

four primary partitions (with no extended partition) on a device. If you

need more than four partitions, you can have three primary partitions,

one extended partition, and multiple logical partitions within the

extended. For an overview of disk partitions, refer to the appendix An Introduction to Disk Partitions in the Red Hat Enterprise Linux 6 Installation Guide.

For example, to create a primary partition with an ext3 file system

from 1024 megabytes until 2048 megabytes on a hard drive type the

following command:

mkpart primary ext3 1024 2048

If you use the mkpartfs command instead, the file system is created after the partition is created. However, parted does not support creating an ext3 file system. Thus, if you wish to create an ext3 file system, use mkpart and create the file system with the mkfs command as described later.

The changes start taking place as soon as you press Enter, so review the command before executing to it.

After creating the partition, use the print

command to confirm that it is in the partition table with the correct

partition type, file system type, and size. Also remember the minor

number of the new partition so that you can label any file systems on

it. You should also view the output of cat /proc/partitions to make sure the kernel recognizes the new partition.

4.2.2. Formatting and Labeling the Partition

The partition still does not have a file system. Create the file system:

/sbin/mkfs -t ext3 /dev/sda6

Formatting the partition permanently destroys any data that currently exists on the partition.

Next, give the file system on the partition a label. For example, if the file system on the new partition is /dev/sda6 and you want to label it /work, use:

e2label /dev/sda6 /work

By default, the installation program uses the mount point of the

partition as the label to make sure the label is unique. You can use any

label you want.

Afterwards, create a mount point (e.g. /work) as root.

As root, edit the /etc/fstab file to include the new partition using the partition's UUID. Use the blkid -L label command to retrieve the partition's UUID. The new line should look similar to the following:

UUID=93a0429d-0318-45c0-8320-9676ebf1ca79 /work ext3 defaults 1 2

The first column should contain UUID=

followed by the file system's UUID. The second column should contain

the mount point for the new partition, and the next column should be the

file system type (for example, ext3 or swap). If you need more

information about the format, read the man page with the command man fstab.

If the fourth column is the word defaults, the partition is mounted at boot time. To mount the partition without rebooting, as root, type the command:

mount /work

4.3. Removing a Partition

Do not attempt to remove a partition on a device that is in use.

Before removing a partition, boot into rescue mode (or unmount any

partitions on the device and turn off any swap space on the device).

Start parted, where /dev/sda is the device on which to remove the partition:

parted /dev/sda

View the current partition table to determine the minor number of the partition to remove:

print

Remove the partition with the command rm. For example, to remove the partition with minor number 3:

rm 3

The changes start taking place as soon as you press Enter, so review the command before committing to it.

After removing the partition, use the print command to confirm that it is removed from the partition table. You should also view the output of

cat /proc/partitions

to make sure the kernel knows the partition is removed.

The last step is to remove it from the /etc/fstab file. Find the line that declares the removed partition, and remove it from the file.

4.4. Resizing a Partition

Do not attempt to resize a partition on a device that is in use.

Before resizing a partition, boot into rescue mode (or unmount any

partitions on the device and turn off any swap space on the device).

Start parted, where /dev/sda is the device on which to resize the partition:

parted /dev/sda

View the current partition table to determine the minor number of the

partition to resize as well as the start and end points for the

partition:

print

To resize the partition, use the resize

command followed by the minor number for the partition, the starting

place in megabytes, and the end place in megabytes. For example:

resize 3 1024 2048

A partition cannot be made larger than the space available on the device

After resizing the partition, use the print

command to confirm that the partition has been resized correctly, is

the correct partition type, and is the correct file system type.

After rebooting the system into normal mode, use the command df to make sure the partition was mounted and is recognized with the new size.

Chapter 6. The Ext3 File System

The ext3 file system is essentially an enhanced version of the ext2

file system. These improvements provide the following advantages:

- Availability

After an unexpected power failure or system crash (also called an unclean system shutdown), each mounted ext2 file system on the machine must be checked for consistency by the e2fsck

program. This is a time-consuming process that can delay system boot

time significantly, especially with large volumes containing a large

number of files. During this time, any data on the volumes is

unreachable.

The journaling provided by the ext3 file system means that this

sort of file system check is no longer necessary after an unclean system

shutdown. The only time a consistency check occurs using ext3 is in

certain rare hardware failure cases, such as hard drive failures. The

time to recover an ext3 file system after an unclean system shutdown

does not depend on the size of the file system or the number of files;

rather, it depends on the size of the journal used to maintain consistency. The default journal size takes about a second to recover, depending on the speed of the hardware.

The only supported journaling mode in ext3 is data=ordered (default).

- Data Integrity

The ext3 file system prevents loss of data integrity in the event

that an unclean system shutdown occurs. The ext3 file system allows you

to choose the type and level of protection that your data receives. By

default, the ext3 volumes are configured to keep a high level of data

consistency with regard to the state of the file system.

- Speed

Despite writing some data more than once, ext3 has a higher

throughput in most cases than ext2 because ext3's journaling optimizes

hard drive head motion. You can choose from three journaling modes to

optimize speed, but doing so means trade-offs in regards to data

integrity if the system was to fail.

- Easy Transition

The Red Hat Enterprise Linux 6 version of ext3 features the following updates:

The default size of the on-disk inode has increased for more

efficient storage of extended attributes, for example ACLs or SELinux

attributes. Along with this change, the default number of inodes created

on a file system of a given size has been decreased. The inode size may

be selected with the mke2fs -I option, or specified in /etc/mke2fs.conf to set system-wide defaults for mke2fs.

If you upgrade to Red Hat Enterprise Linux 6 with the intention of

keeping any ext3 file systems intact, you do not need to remake the file

system.

A new mount option has been added: data_err=abort. This option instructs ext3 to abort the journal if an error occurs in a file data (as opposed to metadata) buffer in data=ordered mode. This option is disabled by default (i.e. set as data_err=ignore).

When creating a file system (i.e. mkfs), mke2fs

will attempt to "discard" or "trim" blocks not used by the file system

metadata. This helps to optimize SSDs or thinly-provisioned storage. To

suppress this behavior, use the mke2fs -K option.

6.1. Creating an Ext3 File System

After installation, it is sometimes necessary to create a new ext3

file system. For example, if you add a new disk drive to the system, you

may want to partition the drive and use the ext3 file system.

The steps for creating an ext3 file system are as follows:

Format the partition with the ext3 file system using mkfs.

Label the file system using e2label.

6.2. Converting to an Ext3 File System

The tune2fs allows you to convert an ext2 file system to ext3.

Always use the e2fsck utility to check your file system before and after using tune2fs.

A default installation of Red Hat Enterprise Linux uses ext4 for all

file systems. Before trying to convert, back up all your file systems in

case any errors occur.

In addition, Red Hat recommends that you should, whenever possible,

create a new ext3 file system and migrate your data to it instead of

converting from ext2 to ext3.

To convert an ext2 file system to ext3, log in as root and type the following command in a terminal:

tune2fs -j block_device

where block_device contains the ext2 file system you wish to convert.

A valid block device could be one of two types of entries:

A mapped device — A logical volume in a volume group, for example, /dev/mapper/VolGroup00-LogVol02.

A static device — A traditional storage volume, for example, /dev/sdbX, where sdb is a storage device name and X is the partition number.

Issue the df command to display mounted file systems.

6.3. Reverting to an Ext2 File System

For simplicity, the sample commands in this section use the following value for the block device:

/dev/mapper/VolGroup00-LogVol02

If you wish to revert a partition from ext3 to ext2 for any reason,

you must first unmount the partition by logging in as root and typing,

umount /dev/mapper/VolGroup00-LogVol02

Next, change the file system type to ext2 by typing the following command as root:

tune2fs -O ^has_journal /dev/mapper/VolGroup00-LogVol02

Check the partition for errors by typing the following command as root:

e2fsck -y /dev/mapper/VolGroup00-LogVol02

Then mount the partition again as ext2 file system by typing:

mount -t ext2 /dev/mapper/VolGroup00-LogVol02 /mount/point

In the above command, replace /mount/point with the mount point of the partition.

If a .journal file exists at the root level of the partition, delete it.

You now have an ext2 partition.

If you want to permanently change the partition to ext2, remember to update the /etc/fstab file.

Chapter 7.

The Ext4 File System

The ext4 file system is a scalable extension of the ext3 file system,

which was the default file system of Red Hat Enterprise Linux 5. Ext4 is

now the default file system of Red Hat Enterprise Linux 6, and can

support files and file systems of up to 16 terabytes in size. It also

supports an unlimited number of sub-directories (the ext3 file system

only supports up to 32,000).

- Main Features

Ext4 uses extents (as opposed to the traditional block mapping

scheme used by ext2 and ext3), which improves performance when using

large files and reduces metadata overhead for large files. In addition,

ext4 also labels unallocated block groups and inode table sections

accordingly, which allows them to be skipped during a file system check.

This makes for quicker file system checks, which becomes more

beneficial as the file system grows in size.

- Allocation Features

The ext4 file system features the following allocation schemes:

Because of delayed allocation and other performance optimizations,

ext4's behavior of writing files to disk is different from ext3. In

ext4, a program's writes to the file system are not guaranteed to be

on-disk unless the program issues an fsync() call afterwards.

By default, ext3 automatically forces newly created files to disk almost immediately even without fsync(). This behavior hid bugs in programs that did not use fsync()

to to ensure that written data was on-disk. The ext4 file system, on

the other hand, often waits several seconds to write out changes to

disk, allowing it to combine and reorder writes for better disk

performance than ext3

Unlike ext3, the ext4 file system does not force data to disk on

transaction commit. As such, it takes longer for buffered writes to be

flushed to disk. As with any file system, use data integrity calls such

as fsync() to ensure that data is written to permanent storage.

- Other Ext4 Features

The Ext4 file system also supports the following:

Extended attributes (xattr), which allows the system to associate several additional name/value pairs per file.

Quota journaling, which avoids the need for lengthy quota consistency checks after a crash.

The only supported journaling mode in ext4 is data=ordered (default).

Subsecond timestamps

7.1. Creating an Ext4 File System

To create an ext4 file system, use the mkfs.ext4 command. In general, the default options are optimal for most usage scenarios, as in:

mkfs.ext4 /dev/device

Below is a sample output of this command, which displays the resulting file system geometry and features:

mke2fs 1.41.9 (22-Aug-2009)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

1954064 inodes, 7813614 blocks

390680 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=4294967296

239 block groups

32768 blocks per group, 32768 fragments per group

8176 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

For striped block devices (e.g. RAID5 arrays), the stripe geometry

can be specified at the time of file system creation. Using proper

stripe geometry greatly enhances performance of an ext4 file system.

When creating file systems on lvm or md volumes, mkfs.ext4

chooses an optimal geometry. This may also be true on some hardware

RAIDs which export geometry information to the operating system.

To specify stripe geometry, use the -E option of mkfs.ext4 (i.e. extended file system options) with the following sub-options:

- stride=

value

Specifies the RAID chunk size.

- stripe-width=

value

Specifies the number of data disks in a RAID device, or the number of stripe units in the stripe.

For both sub-options, value

mkfs.ext4 -E stride=16,stripe-width=64 /dev/device

For more information about creating file systems, refer to man mkfs.ext4.

It is possible to use tune2fs to enable

some ext4 features on ext3 file systems, and to use the ext4 driver to

mount an ext3 file system. These actions, however, are not

supported in Red Hat Enterprise Linux 6, as they have not been fully

tested. Because of this, Red Hat cannot guarantee consistent performance

and predictable behavior for ext3 file systems converted or mounted

thusly.

7.2. Mounting an Ext4 File System

An ext4 file system can be mounted with no extra options. For example:

mount /dev/device /mount/point

The ext4 file system also supports several mount options to influence behavior. For example, the acl parameter enables access control lists, while the user_xattr parameter enables user extended attributes. To enable both options, use their respective parameters with -o, as in:

mount -o acl,user_xattr /dev/device /mount/point

The tune2fs utility also allows

administrators to set default mount options in the file system

superblock. For more information on this, refer to man tune2fs.

By default, ext4 uses write barriers to ensure file system integrity

even when power is lost to a device with write caches enabled. For

devices without write caches, or with battery-backed write caches,

disable barriers using the nobarrier option, as in:

mount -o nobarrier /dev/device /mount/point

7.3.

Resizing an Ext4 File System

Before growing an ext4 file system, ensure that the underlying block

device is of an appropriate size to hold the file system later. Use the

appropriate resizing methods for the affected block device.

An ext4 file system may be grown while mounted using the resize2fs command, as in:

resize2fs /mount/point size

The resize2fs command can also decrease the size of an unmounted ext4 file system, as in:

resize2fs /dev/device size

When resizing an ext4 file system, the resize2fs

utility reads the size in units of file system block size, unless a

suffix indicating a specific unit is used. The following suffixes

indicate specific units:

s — 512kb sectors

K — kilobytes

M — megabytes

G — gigabytes

For more information about resizing an ext4 file system, refer to man resize2fs.

7.4. Other Ext4 File System Utilities

Red Hat Enterprise Linux 6 also features other utilities for managing ext4 file systems:

- e2fsck

Used to repair an ext4 file system. This tool checks and repairs

an ext4 file system more efficiently than ext3, thanks to updates in the

ext4 disk structure.

- e2label

Changes the label on an ext4 file system. This tool can also works on ext2 and ext3 file systems.

- quota

Controls and reports on disk space (blocks) and file (inode) usage

by users and groups on an ext4 file system. For more information on

using

quota, refer to

man quota and

Section 15.1, “Configuring Disk Quotas”.

As demonstrated earlier in

Section 7.2, “Mounting an Ext4 File System”, the

tune2fs

utility can also adjust configurable file system parameters for ext2,

ext3, and ext4 file systems. In addition, the following tools are also

useful in debugging and analyzing ext4 file systems:

- debugfs

Debugs ext2, ext3, or ext4 file systems.

- e2image

Saves critical ext2, ext3, or ext4 file system metadata to a file.

For more information about these utilities, refer to their respective man pages.

Chapter 8. Global File System 2

The Red Hat GFS2 file system is a native file system that interfaces

directly with the Linux kernel file system interface (VFS layer). When

implemented as a cluster file system, GFS2 employs distributed metadata

and multiple journals.

GFS2 is based on a 64-bit architecture, which can theoretically

accommodate an 8 EB file system. However, the current supported maximum

size of a GFS2 file system is 100 TB. If your system requires GFS2 file

systems larger than 100 TB, contact your Red Hat service representative.

When determining the size of your file system, you should consider your recovery needs. Running the fsck

command on a very large file system can take a long time and consume a

large amount of memory. Additionally, in the event of a disk or

disk-subsytem failure, recovery time is limited by the speed of your

backup media.

When configured in a Red Hat Cluster Suite, Red Hat GFS2 nodes can be

configured and managed with Red Hat Cluster Suite configuration and

management tools. Red Hat GFS2 then provides data sharing among GFS2

nodes in a Red Hat cluster, with a single, consistent view of the file

system name space across the GFS2 nodes. This allows processes on

different nodes to share GFS2 files in the same way that processes on

the same node can share files on a local file system, with no

discernible difference. For information about the Red Hat Cluster Suite,

refer to Configuring and Managing a Red Hat Cluster.

A GFS2 file system must be created on an LVM logical volume that is a

linear or mirrored volume. LVM logical volumes in a Red Hat Cluster

suite are managed with CLVM, which is a cluster-wide implementation of

LVM, enabled by the CLVM daemon, clvmd,

running in a Red Hat Cluster Suite cluster. The daemon makes it possible

to use LVM2 to manage logical volumes across a cluster, allowing all

nodes in the cluster to share the logical volumes. For information on

the LVM volume manager, see Logical Volume Manager Administration.

The gfs2.ko kernel module implements the GFS2 file system and is loaded on GFS2 cluster nodes.

For comprehensive information on the creation and configuration of

GFS2 file systems in clustered and non-clustered storage, please refer

to the Global File System 2 guide also provided by Red Hat.

Chapter 9.

The XFS File System

XFS is a highly scalable, high-performance file system which was

originally designed at Silicon Graphics, Inc. It was created to support

extremely large filesystems (up to 16 exabytes), files (8 exabytes) and

directory structures (tens of millions of entries).

- Main Features

XFS supports metadata journaling, which

facilitates quicker crash recovery. The XFS file system can also be

defragmented and enlarged while mounted and active. In addition, Red Hat

Enterprise Linux 6 supports backup and restore utilities specific to

XFS.

- Allocation Features

XFS features the following allocation schemes:

Delayed allocation and other performance optimizations affect XFS

the same way that they do ext4. Namely, a program's writes to a an XFS

file system are not guaranteed to be on-disk unless the program issues

an fsync() call afterwards.

For more information on the implications of delayed allocation on a file system, refer to

Allocation Features in

Chapter 7,

The Ext4 File System. The workaround for ensuring writes to disk applies to XFS as well.

- Other XFS Features

The XFS file system also supports the following:

Extended attributes (xattr), which allows the system to associate several additional name/value pairs per file.

Quota journalling, which avoids the need for lengthy quota consistency checks after a crash.

Project/directory quotas, allowing quota restrictions over a directory tree.

Subsecond timestamps

9.1. Creating an XFS File System

To create an XFS file system, use the mkfs.xfs /dev/device command. In general, the default options are optimal for common use.

When using mkfs.xfs on a block device containing an existing file system, use the -f option to force an overwrite of that file system.

Below is a sample output of the mkfs.xfs command:

meta-data=/dev/device isize=256 agcount=4, agsize=3277258 blks

= sectsz=512 attr=2

data = bsize=4096 blocks=13109032, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=6400, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

For striped block devices (e.g., RAID5 arrays), the stripe geometry

can be specified at the time of file system creation. Using proper

stripe geometry greatly enhances the performance of an XFS filesystem.

When creating filesystems on lvm or md volumes, mkfs.xfs

chooses an optimal geometry. This may also be true on some hardware

RAIDs which export geometry information to the operating system.

To specify stripe geometry, use the following mkfs.xfs sub-options:

- su=

value

Specifies a stripe unit or RAID chunk size. The valuek, m, or g suffix.

- sw=

value

Specifies the number of data disks in a RAID device, or the number of stripe units in the stripe.

The following example specifies a chunk size of 64k on a RAID device containing 4 stripe units:

mkfs.xfs -d su=64k,sw=4 /dev/device

For more information about creating XFS file systems, refer to man mkfs.xfs.

9.2. Mounting an XFS File System

An XFS file system can be mounted with no extra options, for example:

mount /dev/device /mount/point

XFS also supports several mount options to influence behavior.

By default, XFS allocates inodes to reflect their on-disk location.

However, because some 32-bit userspace applications are not compatible

with inode numbers greater than 232, XFS will allocate all

inodes in disk locations which result in 32-bit inode numbers. This can

lead to decreased performance on very large filesystems (i.e. larger

than 2 terabytes), because inodes are skewed to the beginning of the

block device, while data is skewed towards the end.

To address this, use the inode64 mount

option. This option configures XFS to allocate inodes and data across

the entire file system, which can improve performance:

mount -o inode64 /dev/device /mount/point

By default, XFS uses write barriers to ensure file system integrity

even when power is lost to a device with write caches enabled. For

devices without write caches, or with battery-backed write caches,

disable barriers using the nobarrier option, as in:

mount -o nobarrier /dev/device /mount/point

9.3. XFS Quota Management

The XFS quota subsystem manages limits on disk space (blocks) and

file (inode) usage. XFS quotas control and/or report on usage of these

items on a user, group, or directory/project level. Also, note that

while user, group, and directory/project quotas are enabled

independently, group and project quotas a mutually exclusive.

When managing on a per-directory or per-project basis, XFS manages

the disk usage of directory heirarchies associated with a specific

project. In doing so, XFS recognizes cross-organizational "group"

boundaries between projects. This provides a level of control that is

broader than what is available when managing quotas for users or groups.

XFS quotas are enabled at mount time, with specific mount options. Each mount option can also be specified as noenforce; this will allow usage reporting without enforcing any limits. Valid quota mount options are:

uquota/uqnoenforce - User quotas

gquota/gqnoenforce - Group quotas

pquota/pqnoenforce - Project quota

Once quotas are enabled, the xfs_quota tool can be used to set limits and report on disk usage. By default, xfs_quota is run interactively, and in basic mode. Basic mode sub-commands simply report usage, and are available to all users. Basic xfs_quota sub-commands include:

- quota

username/userID

Show usage and limits for the given usernameuserID

- df

Shows free and used counts for blocks and inodes.

In contrast, xfs_quota also has an expert mode.

The sub-commands of this mode allow actual configuration of limits, and

are available only to users with elevated privileges. To use expert

mode sub-commands interactively, run xfs_quota -x. Expert mode sub-commands include:

- report

/path

Reports quota information for a specific file system.

- limit

Modify quota limits.

For a complete list of sub-commands for either basic or expert mode, use the sub-command help.

All sub-commands can also be run directly from a command line using the -c option, with -x for expert sub-commands. For example, to display a sample quota report for /home (on /dev/blockdevice), use the command xfs_quota -cx 'report -h' /home. This will display output similar to the following:

User quota on /home (/dev/blockdevice)

Blocks

User ID Used Soft Hard Warn/Grace

---------- ---------------------------------

root 0 0 0 00 [------]

testuser 103.4G 0 0 00 [------]

...

To set a soft and hard inode count limit of 500 and 700 respectively for user john (whose home directory is /home/john), use the following command:

xfs_quota -x -c 'limit isoft=500 ihard=700 /home/john'

By default, the limit sub-command recognizes targets as users. When configuring the limits for a group, use the -g option (as in the previous example). Similarly, use -p for projects.

Soft and hard block limits can also be configured using bsoft/bhard instead of isoft/ihard. For example, to set a soft and hard block limit of 1000m and 1200m, respectively, to group accounting on the /target/path file system, use the following command:

xfs_quota -x -c 'limit -g bsoft=1000m bhard=1200m accounting' /target/path

While real-time blocks (rtbhard/rtbsoft) are described in man xfs_quota as valid units when setting quotas, the real-time sub-volume is not enabled in this release. As such, the rtbhard and rtbsoft options are not applicable.

Before configuring limits for project-controlled directories, add them first to /etc/projects. Project names can be added to/etc/projectid to map project IDs to project names. Once a project is added to /etc/projects, initialize its project directory using the following command:

xfs_quota -c 'project -s projectname'

Quotas for projects with initialized directories can then be configured, as in:

xfs_quota -x -c 'limit -p bsoft=1000m bhard=1200m projectname'

Generic quota configuration tools (e.g. quota, repquota, edquota) may also be used to manipulate XFS quotas. However, these tools cannot be used with XFS project quotas.

For more information about setting XFS quotas, refer to man xfs_quota.

9.4. Increasing the Size of an XFS File System

An XFS file system may be grown while mounted using the xfs_growfs command, as in:

xfs_growfs /mount/point -D size

The -D size option grows the file system to the specified size-D size option, xfs_growfs will grow the file system to the maximum size supported by the device.

Before growing an XFS file system with -D size,

ensure that the underlying block device is of an appropriate size to

hold the file system later. Use the appropriate resizing methods for the

affected block device.

While XFS file systems can be grown while mounted, their size cannot be reduced at all.

For more information about growing a file system, refer to man xfs_growfs.

9.5.

Repairing an XFS File System

To repair an XFS file system, use xfs_repair, as in:

xfs_repair /dev/device

The xfs_repair utility is highly

scalable, and is designed to repair even very large file systems with

many inodes efficiently. Note that unlike other Linux file systems, xfs_repair does not run at boot time, even when an XFS file system was not cleanly unmounted. In the event of an unclean unmount, xfs_repair simply replays the log at mount time, ensuring a consistent file system.

The xfs_repair utility cannot repair an

XFS file system with a dirty log. To clear the log, mount and unmount

the XFS file system. If the log is corrupt and cannot be replayed, use

the -L option ("force log zeroing") to clear the log, i.e. xfs_repair -L /dev/device. Note, however, that this may result in further corruption or data loss.

For more information about repairing an XFS file system, refer to man xfs_repair.

9.6. Suspending an XFS File System

To suspend or resume write activity to a file system, use xfs_freeze. Suspending write activity allows hardware-based device snapshots to be used to capture the file system in a consistent state.

The xfs_freeze utility is provided by the xfsprogs package, which is only available on x86_64.

To suspend (i.e. freeze) an XFS file system, use:

xfs_freeze -f /mount/point

To unfreeze an XFS file system, use:

xfs_freeze -u /mount/point

When taking an LVM snapshot, it is not necessary to use xfs_freeze

to suspend the file system first. Rather, the LVM management tools will

automatically suspend the XFS file system before taking the snapshot.

You can also use the xfs_freeze utility to freeze/unfreeze an ext3, ext4, GFS2, XFS, and BTRFS, file system. The syntax for doing so is also the same.

For more information about freezing and unfreezing an XFS file system, refer to man xfs_freeze.

9.7.

Backup and Restoration of XFS File Systems

XFS file system backup and restoration involves two utilities: xfsdump and xfsrestore.

To backup or dump an XFS file system, use the xfsdump

utility. Red Hat Enterprise Linux 6 supports backups to tape drives or

regular file images, and also allows multiple dumps to be written to the

same tape. The xfsdump utility also allows a dump to span multiple tapes, although only one dump can be written to a regular file. In addition, xfsdump supports incremental backups, and can exclude files from a backup using size, subtree, or inode flags to filter them.

In order to support incremental backups, xfsdump uses dump levels to determine a base dump to which a specific dump is relative. The -l option specifies a dump level (0-9). To perform a full backup, perform a level 0 dump on the file system (i.e. /path/to/filesystem

xfsdump -l 0 -f /dev/device /path/to/filesystem

The -f option specifies a destination for a backup. For example, the /dev/st0 destination is normally used for tape drives. An xfsdump destination can be a tape drive, regular file, or remote tape device.

In contrast, an incremental backup will only dump files that changed since the last level 0 dump. A level 1 dump is the first incremental dump after a full dump; the next incremental dump would be level 2, and so on, to a maximum of level 9. So, to perform a level 1 dump to a tape drive:

xfsdump -l 1 -f /dev/st0 /path/to/filesystem

Conversely, the xfsrestore utility restores file systems from dumps produced by xfsdump. The xfsrestore utility has two modes: a default simple mode, and a cumulative mode. Specific dumps are identified by session ID or session label.

As such, restoring a dump requires its corresponding session ID or

label. To display the session ID and labels of all dumps (both full and

incremental), use the -I option, as in:

xfsrestore -I

This will provide output similar to the following:

file system 0:

fs id: 45e9af35-efd2-4244-87bc-4762e476cbab

session 0:

mount point: bear-05:/mnt/test

device: bear-05:/dev/sdb2

time: Fri Feb 26 16:55:21 2010

session label: "my_dump_session_label"

session id: b74a3586-e52e-4a4a-8775-c3334fa8ea2c

level: 0

resumed: NO

subtree: NO

streams: 1

stream 0:

pathname: /mnt/test2/backup

start: ino 0 offset 0

end: ino 1 offset 0

interrupted: NO

media files: 1

media file 0:

mfile index: 0

mfile type: data

mfile size: 21016

mfile start: ino 0 offset 0

mfile end: ino 1 offset 0

media label: "my_dump_media_label"

media id: 4a518062-2a8f-4f17-81fd-bb1eb2e3cb4f

xfsrestore: Restore Status: SUCCESS

The simple mode allows users to restore an entire file system from a level 0 dump. After identifying a level 0 dump's session ID (i.e. session-ID/path/to/destination

xfsrestore -f /dev/st0 -S session-ID /path/to/destination

The -f option specifies the location of the dump, while the -S or -L option specifies which specific dump to restore. The -S option is used to specify a session ID, while the -L option is used for session labels. The -I option displays both session labels and IDs for each dump.

xfsrestore Cumulative Mode

The cumulative mode of xfsrestore allows file system restoration from a specific incremental backup, i.e. level 1 to level 9. To restore a file system from an incremental backup, simply add the -r option, as in:

xfsrestore -f /dev/st0 -S session-ID -r /path/to/destination

The xfsrestore utility also allows specific files from a dump to be extracted, added, or deleted. To use xfsrestore interactively, use the -i option, as in:

xfsrestore -f /dev/st0 -i

The interactive dialogue will begin after xfsrestore finishes reading the specified device. Available commands in this dialogue include cd, ls, add, delete, and extract; for a complete list of commands, use help.

For more information about dumping and restoring XFS file systems, refer to man xfsdump and man xfsrestore.

9.8. Other XFS File System Utilities

Red Hat Enterprise Linux 6 also features other utilities for managing XFS file systems:

- xfs_fsr

Used to defragment mounted XFS file systems. When invoked with no arguments, xfs_fsr

defragments all regular files in all mounted XFS file systems. This

utility also allows users to suspend a defragmentation at a specified

time and resume from where it left off later.

In addition, xfs_fsr also allows the defragmentation of only one file, as in xfs_fsr /path/to/file. Red Hat advises against periodically defragmenting an entire file system, as this is normally not warranted.

- xfs_bmap

Prints the map of disk blocks used by files in an XFS filesystem.

This map list each extent used by a specified file, as well as regions

in the file with no corresponding blocks (i.e. holes).

- xfs_info

Prints XFS file system information.

- xfs_admin

Changes the parameters of an XFS file system. The xfs_admin utility can only modify parameters of unmounted devices/file systems.

- xfs_copy

Copies the contents of an entire XFS file system to one or more targets in parallel.

The following utilities are also useful in debugging and analyzing XFS file systems:

- xfs_metadump

Copies XFS file system metadata to a file. The xfs_metadump

utility should only be used to copy unmounted, read-only, or

frozen/suspended file systems; otherwise, generated dumps could be

corrupted or inconsistent.

- xfs_mdrestore

Restores and XFS metadump image (generated using xfs_metadump) to a file system image.

- xfs_db

Debugs an XFS file system.

For more information about these utilities, refer to their respective man pages.

Chapter 10.

Network File System (NFS)

A Network File System (NFS)

allows remote hosts to mount file systems over a network and interact

with those file systems as though they are mounted locally. This enables

system administrators to consolidate resources onto centralized servers

on the network.

This chapter focuses on fundamental NFS concepts and supplemental information.

Currently, there are three versions of NFS. NFS version 2 (NFSv2) is

older and is widely supported. NFS version 3 (NFSv3) supports safe

asynchronous writes and a more robust error handling than NFSv2; it also

supports 64-bit file sizes and offsets, allowing clients to access more

than 2Gb of file data.

NFS version 4 (NFSv4) works through firewalls and on the Internet, no longer requires an rpcbind

service, supports ACLs, and utilizes stateful operations. Red Hat

Enterprise Linux supports NFSv2, NFSv3, and NFSv4 clients. When mounting

a file system via NFS, Red Hat Enterprise Linux uses NFSv4 by default,

if the server supports it.

All versions of NFS can use Transmission Control Protocol (TCP) running over an IP network, with NFSv4 requiring it. NFSv2 and NFSv3 can use the User Datagram Protocol (UDP) running over an IP network to provide a stateless network connection between the client and server.

When using NFSv2 or NFSv3 with UDP, the stateless UDP connection

(under normal conditions) has less protocol overhead than TCP. This can

translate into better performance on very clean, non-congested networks.

However, because UDP is stateless, if the server goes down

unexpectedly, UDP clients continue to saturate the network with requests

for the server. In addition, when a frame is lost with UDP, the entire

RPC request must be retransmitted; with TCP, only the lost frame needs

to be resent. For these reasons, TCP is the preferred protocol when

connecting to an NFS server.

The mounting and locking protocols have been incorporated into the

NFSv4 protocol. The server also listens on the well-known TCP port 2049.

As such, NFSv4 does not need to interact with rpcbind[], rpc.lockd, and rpc.statd daemons. The rpc.mountd daemon is still required on the NFS server so set up the exports, but is not involved in any over-the-wire operations.

TCP is the default transport protocol for NFS version 2 and 3 under

Red Hat Enterprise Linux. UDP can be used for compatibility purposes as

needed, but is not recommended for wide usage. NFSv4 requires TCP.

All the RPC/NFS daemon have a '-p' command line option that can set the port, making firewall configuration easier.

After TCP wrappers grant access to the client, the NFS server refers to the /etc/exports

configuration file to determine whether the client is allowed to access

any exported file systems. Once verified, all file and directory

operations are available to the user.

In order for NFS to work with a default installation of Red Hat

Enterprise Linux with a firewall enabled, configure IPTables with the

default TCP port 2049. Without proper IPTables configuration, NFS will

not function properly.

The NFS initialization script and rpc.nfsd

process now allow binding to any specified port during system start up.

However, this can be error-prone if the port is unavailable, or if it

conflicts with another daemon.

10.1.1. Required Services

Red Hat Enterprise Linux uses a combination of kernel-level support

and daemon processes to provide NFS file sharing. All NFS versions rely

on Remote Procedure Calls (RPC) between clients and servers. RPC services under Red Hat Enterprise Linux 6 are controlled by the rpcbind

service. To share or mount NFS file systems, the following services

work together, depending on which version of NFS is implemented:

The portmap service was used to map

RPC program numbers to IP address port number combinations in earlier

versions of Red Hat Enterprise Linux. This service is now replaced by rpcbind in Red Hat Enterprise Linux 6 to enable IPv6 support. For more information about this change, refer to the following links:

- nfs

service nfs start starts the NFS server and the appropriate RPC processes to service requests for shared NFS file systems.

- nfslock

service nfslock start activates a mandatory service that starts the appropriate RPC processes which allow NFS clients to lock files on the server.

- rpcbind

rpcbind accepts port reservations

from local RPC services. These ports are then made available (or

advertised) so the corresponding remote RPC services can access them. rpcbind responds to requests for RPC services and sets up connections to the requested RPC service. This is not used with NFSv4.

The following RPC processes facilitate NFS services:

- rpc.mountd

This process receives mount requests from NFS clients and

verifies that the requested file system is currently exported. This

process is started automatically by the nfs service and does not require user configuration.

- rpc.nfsd

rpc.nfsd allows explicit NFS

versions and protocols the server advertises to be defined. It works

with the Linux kernel to meet the dynamic demands of NFS clients, such

as providing server threads each time an NFS client connects. This

process corresponds to the nfs service.

- rpc.lockd

rpc.lockd allows NFS clients to lock files on the server. If rpc.lockd is not started, file locking will fail. rpc.lockd implements the Network Lock Manager (NLM) protocol. This process corresponds to the nfslock service. This is not used with NFSv4.

- rpc.statd

This process implements the Network Status Monitor (NSM) RPC protocol, which notifies NFS clients when an NFS server is restarted without being gracefully brought down. rpc.statd is started automatically by the nfslock service, and does not require user configuration. This is not used with NFSv4.

- rpc.rquotad

This process provides user quota information for remote users. rpc.rquotad is started automatically by the nfs service and does not require user configuration.

- rpc.idmapd

rpc.idmapd provides NFSv4 client and server upcalls, which map between on-the-wire NFSv4 names (which are strings in the form of user@domainidmapd to function with NFSv4, the /etc/idmapd.conf must be configured. This service is required for use with NFSv4, although not when all hosts share the same DNS domain name.

10.2. NFS Client Configuration

The mount command mounts NFS shares on the client side. Its format is as follows:

mount -t nfs -o options host:/remote/export /local/directory

This command uses the following variables:

optionsserver

The hostname, IP address, or fully qualified domain name of the server exporting the file system you wish to mount

/remote/export

The file system / directory being exported from server, i.e. the directory you wish to mount

/local/directory

The client location where /remote/export should be mounted

The NFS protocol version used in Red Hat Enterprise Linux 6 is identified by the mount options nfsvers or vers. By default, mount will use NFSv4 with mount -t nfs.

If the server does not support NFSv4, the client will automatically

step down to a version supported by the server. If you use the nfsvers/vers

option to pass a particular version not supported by the server, the

mount will fail. The file system type nfs4 is also available for legacy

reasons; this is equivalent to running mount -t nfs -o nfsvers=4 host:/remote/export /local/directory.

Refer to man mount for more details.

10.2.1. Mounting NFS File Systems using /etc/fstab

An alternate way to mount an NFS share from another machine is to add a line to the

/etc/fstab

file. The line must state the hostname of the NFS server, the directory

on the server being exported, and the directory on the local machine

where the NFS share is to be mounted. You must be root to modify the

/etc/fstab file.

The general syntax for the line in /etc/fstab is as follows:

server:/usr/local/pub /pub nfs rsize=8192,wsize=8192,timeo=14,intr

The mount point /pub must exist on the client machine before this command can be executed. After adding this line to /etc/fstab on the client system, use the command mount /pub, and the mount point /pub is mounted from the server.

The /etc/fstab file is referenced by the netfs service at boot time, so lines referencing NFS shares have the same effect as manually typing the mount command during the boot process.

A valid /etc/fstab entry to mount an NFS export should contain the following information:

server:/remote/export /local/directory nfs options 0 0

The variables

server,

/remote/export,

/local/directory, and

options are the same ones used when manually mounting an NFS share. Refer to

Section 10.2, “NFS Client Configuration” for a definition of each variable.

The mount point /local/directory must exist on the client before /etc/fstab is read. Otherwise, the mount will fail.

For more information about /etc/fstab, refer to man fstab.

One drawback to using /etc/fstab is

that, regardless of how infrequently a user accesses the NFS mounted

file system, the system must dedicate resources to keep the mounted file

system in place. This is not a problem with one or two mounts, but when

the system is maintaining mounts to many systems at one time, overall

system performance can be affected. An alternative to /etc/fstab is to use the kernel-based automount utility. An automounter consists of two components:

The automount utility can mount and

unmount NFS file systems automatically (on-demand mounting), therefore

saving system resources. It can be used to mount other file systems

including AFS, SMBFS, CIFS, and local file systems.

autofs uses /etc/auto.master

(master map) as its default primary configuration file. This can be

changed to use another supported network source and name using the autofs configuration (in /etc/sysconfig/autofs) in conjunction with the Name Service Switch (NSS) mechanism. An instance of the autofs

version 4 daemon was run for each mount point configured in the master

map and so it could be run manually from the command line for any given

mount point. This is not possible with autofs

version 5, because it uses a single daemon to manage all configured

mount points; as such, all automounts must be configured in the master

map. This is in line with the usual requirements of other industry

standard automounters. Mount point, hostname, exported directory, and

options can all be specified in a set of files (or other supported

network sources) rather than configuring them manually for each host.

10.3.1. Improvements in autofs Version 5 over Version 4

autofs version 5 features the following enhancements over version 4:

- Direct map support

Direct maps in autofs provide a

mechanism to automatically mount file systems at arbitrary points in the

file system hierarchy. A direct map is denoted by a mount point of /-

in the master map. Entries in a direct map contain an absolute path

name as a key (instead of the relative path names used in indirect

maps).

- Lazy mount and unmount support

Multi-mount map entries describe a hierarchy of mount points under a single key. A good example of this is the -hosts map, commonly used for automounting all exports from a host under "/net/host" as a multi-mount map entry. When using the "-hosts" map, an 'ls' of "/net/host" will mount autofs trigger mounts for each export from host

and mount and expire them as they are accessed. This can greatly reduce

the number of active mounts needed when accessing a server with a large

number of exports.

- Enhanced LDAP support

The Lightweight Directory Access Protocol (LDAP) support in autofs version 5 has been enhanced in several ways with respect to autofs version 4. The autofs configuration file (/etc/sysconfig/autofs) provides a mechanism to specify the autofs

schema that a site implements, thus precluding the need to determine

this via trial and error in the application itself. In addition,

authenticated binds to the LDAP server are now supported, using most

mechanisms supported by the common LDAP server implementations. A new

configuration file has been added for this support: /etc/autofs_ldap_auth.conf. The default configuration file is self-documenting, and uses an XML format.

- Proper use of the Name Service Switch (

nsswitch) configuration.

The Name Service Switch configuration file exists to provide a

means of determining from where specific configuration data comes. The

reason for this configuration is to allow administrators the flexibility

of using the back-end database of choice, while maintaining a uniform

software interface to access the data. While the version 4 automounter

is becoming increasingly better at handling the NSS configuration, it is

still not complete. Autofs version 5, on the other hand, is a complete

implementation.

Refer to man nsswitch.conf for more

information on the supported syntax of this file. Please note that not

all NSS databases are valid map sources and the parser will reject ones

that are invalid. Valid sources are files, yp, nis, nisplus, ldap, and hesiod.

- Multiple master map entries per autofs mount point

One thing that is frequently used but not yet mentioned is the

handling of multiple master map entries for the direct mount point /-. The map keys for each entry are merged and behave as one map.

An example is seen in the connectathon test maps for the direct mounts below:

/- /tmp/auto_dcthon

/- /tmp/auto_test3_direct

/- /tmp/auto_test4_direct

10.3.2. autofs Configuration

The primary configuration file for the automounter is

/etc/auto.master, also referred to as the master map which may be changed as described in the

Section 10.3.1, “Improvements in autofs Version 5 over Version 4”. The master map lists

autofs-controlled

mount points on the system, and their corresponding configuration files

or network sources known as automount maps. The format of the master

map is as follows:

mount-point map-name options

The variables used in this format are:

mount-point

The autofs mount point e.g /home.

map-name

The name of a map source which contains a list of mount points,

and the file system location from which those mount points should be

mounted. The syntax for a map entry is described below.

options

If supplied, these will apply to all entries in the given map

provided they don't themselves have options specified. This behavior is

different from autofs version 4 where options where cumulative. This has been changed to implement mixed environment compatibility.

The following is a sample line from /etc/auto.master file (displayed with cat /etc/auto.master):

/home /etc/auto.misc

The general format of maps is similar to the master map, however the

"options" appear between the mount point and the location instead of at

the end of the entry as in the master map:

mount-point [options] location

The variables used in this format are:

mount-point

This refers to the autofs mount

point. This can be a single directory name for an indirect mount or the

full path of the mount point for direct mounts. Each direct and indirect

map entry key (mount-point

options

Whenever supplied, these are the mount options for the map entries that do not specify their own options.

location

This refers to the file system location such as a local file

system path (preceded with the Sun map format escape character ":" for

map names beginning with "/"), an NFS file system or other valid file

system location.

The following is a sample of contents from a map file (i.e. /etc/auto.misc):

payroll -fstype=nfs personnel:/dev/hda3

sales -fstype=ext3 :/dev/hda4

The first column in a map file indicates the autofs mount point (sales and payroll from the server called personnel). The second column indicates the options for the autofs

mount while the third column indicates the source of the mount.

Following the above configuration, the autofs mount points will be /home/payroll and /home/sales. The -fstype= option is often omitted and is generally not needed for correct operation.

The automounter will create the directories if they do not exist. If

the directories exist before the automounter was started, the

automounter will not remove them when it exits. You can start or restart

the automount daemon by issuing either of the following two commands:

service autofs start

service autofs restart

Using the above configuration, if a process requires access to an autofs unmounted directory such as /home/payroll/2006/July.sxc,

the automount daemon automatically mounts the directory. If a timeout

is specified, the directory will automatically be unmounted if the

directory is not accessed for the timeout period.

You can view the status of the automount daemon by issuing the following command:

service autofs status

10.3.3. Overriding or Augmenting Site Configuration Files

It can be useful to override site defaults for a specific mount point

on a client system. For example, consider the following conditions:

Automounter maps are stored in NIS and the /etc/nsswitch.conf file has the following directive:

automount: files nis

The auto.master file contains the following

+auto.master

The NIS auto.master map file contains the following:

/home auto.home

The NIS auto.home map contains the following:

beth fileserver.example.com:/export/home/beth

joe fileserver.example.com:/export/home/joe

* fileserver.example.com:/export/home/&

The file map /etc/auto.home does not exist.

Given these conditions, let's assume that the client system needs to override the NIS map auto.home and mount home directories from a different server. In this case, the client will need to use the following /etc/auto.master map:

/home /etc/auto.home

+auto.master

And the /etc/auto.home map contains the entry:

* labserver.example.com:/export/home/&

Because the automounter only processes the first occurrence of a mount point, /home will contain the contents of /etc/auto.home instead of the NIS auto.home map.

Alternatively, if you just want to augment the site-wide auto.home map with a few entries, create a /etc/auto.home file map, and in it put your new entries and at the end, include the NIS auto.home map. Then the /etc/auto.home file map might look similar to:

mydir someserver:/export/mydir

+auto.home

Given the NIS auto.home map listed above, ls /home would now output:

beth joe mydir